Development of Ethereum has been progressing increasingly quickly this past month. The release of PoC5 (“proof of concept 5”) last month, the day before the sale, marked an important event for the project: for the first time we had two clients, one written in C++ and one in Go, perfectly interoperating with each other and processing the same blockchain. Two weeks later, the Python client was also added to the list, and now a Java version is also almost done. Currently, we are in the process of using an initial quantity of funds that we have already withdrawn from the Ethereum exodus address to expand our operations, and we are hard at work implementing PoC6, the next version in the series, which features a number of enhancements.

At this point, Ethereum is in a state roughly similar to Bitcoin in mid-2009: the clients and protocol work, and people can send transactions and build decentralized applications with contracts and even pretty user interfaces inside of HTML and Javascript, but the software is inefficient, the UI is underdeveloped, networking-level inefficiencies and vulnerabilities will take a while to get rooted out, and there is a very high risk of security holes and consensus failures. In order to be comfortable releasing Ethereum 1.0, there are only four things that absolutely need to be done: protocol and network-level security testing, virtual machine efficiency upgrades, a very large battery of tests to ensure inter-client compatibility, and a finalized consensus algorithm. All of these are now high on our priority list; at the same time, we are also working in parallel on powerful and easy-to-use tools for building decentralized applications, contract standard libraries, better user interfaces, light clients, and all of the other small features that push the development experience from good to best.

PoC6

The major changes that are scheduled for PoC6 are as follows:

- The block time is decreased from 60 seconds to 12 seconds, using a new GHOST-based protocol that expands upon our previous efforts at reducing the block time to 60 seconds.

- The ADDMOD and MULMOD opcodes (unsigned modular addition and unsigned modular multiplication) are added at slots 0x14 and 0x15, respectively. The purpose of these is to make it easier to implement certain kinds of number-theoretic cryptographic algorithms, eg. elliptic curve signature verification. See here for some example code that uses these operations.

- The opcodes DUP and SWAP are removed from their current slots. Instead, we have the new opcodes DUP1, DUP2 … DUP16 at positions 0x80 … 0x8f and similarly SWAP1 … SWAP16 at positions 0x90 … 0x9f. DUPn copies the nth highest value in the stack to the top of the stack, and SWAPn swaps the highest and (n+1)-th highest values on the stack.

- The with statement is added to Serpent, as a manual way of using these opcodes to access variables more efficiently. Example usage is found here. Note that this is an advanced feature, and it has a limitation: if you stack so many layers of nesting beneath a with statement that you end up trying to access a variable more than 16 stack levels deep, compilation will fail. Eventually, the hope is that the Serpent compiler will intelligently choose between stack-based variables and memory-based variables as needed to maximize efficiency.

- The POST opcode is added at slot 0xf3. POST is similar to CALL, except that (1) the opcode has 5 inputs and 0 outputs (ie. it does not return anything), and (2) the execution happens asynchronously, after everything else is finished. More precisely, the process of transaction execution now involves (1) initializing a “post queue” with the message embedded in the transaction, (2) repeatedly processing the first message in the post queue until the post queue is empty, and (3) refunding gas to the transaction origin and processing suicides. POST adds a message to the post queue.

- The hash of a block is now the hash of the header, and not the entire block (which is how it really should have been all along), the code hash for accounts with no code is “” instead of sha3(“”) (making all non-contract accounts 32 bytes more efficient), and the to address for contract creation transactions is now the empty string instead of twenty zero bytes.
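The stack and post-queue semantics described in the list above can be sketched in a few lines of Python. This is only an illustrative model, not client code: the function names dup, swap and run_transaction, and the execute callback standing in for the virtual machine, are assumptions made for this example.

```python
from collections import deque


def dup(stack, n):
    """DUPn: copy the n-th highest stack item onto the top (top is stack[-1])."""
    stack.append(stack[-n])


def swap(stack, n):
    """SWAPn: exchange the top item with the (n+1)-th highest item."""
    stack[-1], stack[-1 - n] = stack[-1 - n], stack[-1]


def run_transaction(first_message, execute):
    """Process messages in FIFO order until the post queue is empty.

    `execute(message, post)` is a hypothetical stand-in for the VM; it may
    call `post(new_message)` to mimic what the POST opcode does.
    """
    queue = deque([first_message])   # (1) start with the transaction's message
    processed = []
    while queue:                     # (2) keep processing the first message
        message = queue.popleft()
        execute(message, queue.append)
        processed.append(message)
    # (3) gas refunds and suicides would be handled here; omitted in this sketch
    return processed


def demo_execute(message, post):
    # Toy behaviour: "tx" posts two messages, "a" posts one more.
    if message == "tx":
        post("a")
        post("b")
    elif message == "a":
        post("c")


stack = [10, 20, 30]
dup(stack, 2)     # copies the 2nd highest value (20) to the top
swap(stack, 3)    # swaps the top with the 4th highest value
order = run_transaction("tx", demo_execute)
```

Note that a posted message only runs after everything queued before it has finished, which is the asynchronous behaviour that distinguishes POST from CALL.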

On Efficiency

Aside from these changes, the one major idea that we are beginning to develop is the concept of “native contract extensions”. The idea comes from long internal and external discussions about the tradeoffs between having a more reduced instruction set (“RISC”) in our virtual machine, limited to basic memory, storage and blockchain interaction, sub-calls and arithmetic, and a more complex instruction set (“CISC”), including features such as elliptic curve signature verification, a wider library of hash algorithms, bloom filters, and data structures such as heaps. The argument in favor of the reduced instruction set is twofold. First, it makes the virtual machine simpler, allowing for easier development of multiple implementations and reducing the risk of security issues and consensus failures. Second, no specific set of opcodes will ever encompass everything that people will want to do, so a more generalized solution would be much more future-proof.

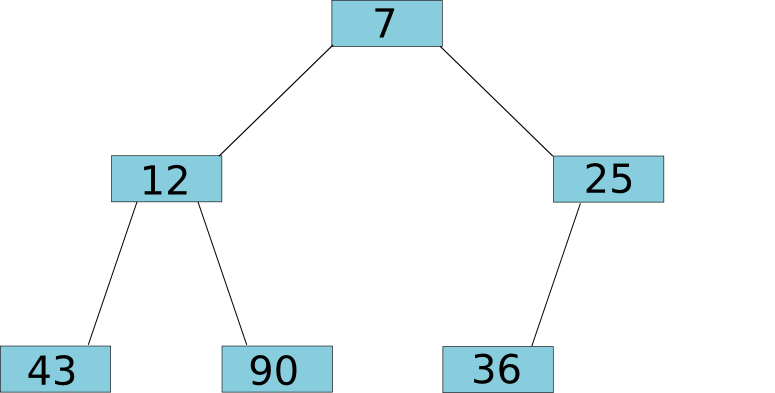

The argument in favor of having more opcodes is simple efficiency. As an example, consider the heap. A heap is a data structure which supports three operations: adding a value to the heap, quickly checking the current smallest value on the heap, and removing the smallest value from the heap. Heaps are particularly useful when building decentralized markets; the simplest way to design a market is to have a heap of sell orders, an inverted (ie. highest-first) heap of buy orders, and repeatedly pop the top buy and sell orders off the heaps and match them with each other for as long as the highest bid is at least as high as the lowest ask. The way to do this relatively quickly, in logarithmic time for adding and removing and constant time for checking, is using a tree:

The key invariant is that the parent node of a tree is always lower than both of its children. The way to add a value to the tree is to add it to the end of the bottom level (or the start of a new bottom level if the current bottom level is full), and then to move the node up the tree, swapping it with its parents, for as long as the parent is higher than the child. At the end of the process, the invariant is again satisfied, with the new node in the tree at the right place:

To remove a node, we pop off the node at the top, take a node out from the bottom level and move it into its place, and then move that node down the tree as deep as makes sense:

And to see what the lowest node is, we, well, look at the top. The key point here is that both adding and removing are logarithmic in the number of nodes in the tree; even if your heap has a billion items, it takes only 30 steps to add or remove a node. It’s a nontrivial exercise in computer science, but if you’re used to dealing with trees it’s not particularly complicated. Now, let’s try to implement this in Ethereum code. The full code sample for this is here; for those interested, the parent directory also contains a batched market implementation using these heaps and an attempt at implementing futarchy using the markets. Here is a code sample for the part of the heap algorithm that handles adding new values:

    # push
    if msg.data[0] == 0:
        sz = contract.storage[0]
        contract.storage[sz + 1] = msg.data[1]
        k = sz + 1
        while k > 1:
            bottom = contract.storage[k]
            top = contract.storage[k/2]
            if bottom < top:
                contract.storage[k] = top
                contract.storage[k/2] = bottom
                k /= 2
            else:
                k = 0
        contract.storage[0] = sz + 1

The model that we use is that contract.storage[0] stores the size (ie. number of values) of the heap, contract.storage[1] is the root node, and from there for any n <= contract.storage[0], contract.storage[n] is a node with parent contract.storage[n/2] and children contract.storage[n*2] and contract.storage[n*2+1] (if n*2 and n*2+1 are less than or equal to the heap size, of course). Relatively simple.
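To make the storage layout concrete, here is a minimal Python sketch that models contract.storage as a dictionary and implements both the push shown above and the removal step described earlier. The names heap_push and heap_pop are hypothetical, and this is a plain Python model rather than Serpent or the code from the linked repository.

```python
def heap_push(storage, value):
    """Append the value at the bottom of the tree, then sift it up."""
    size = storage.get(0, 0)
    k = size + 1
    storage[k] = value
    # Swap with the parent (index k // 2) while the parent is larger.
    while k > 1 and storage[k] < storage[k // 2]:
        storage[k], storage[k // 2] = storage[k // 2], storage[k]
        k //= 2
    storage[0] = size + 1


def heap_pop(storage):
    """Remove and return the smallest value (the root at index 1)."""
    size = storage.get(0, 0)
    if size == 0:
        raise IndexError("pop from empty heap")
    smallest = storage[1]
    # Move the last node of the bottom level into the root position.
    storage[1] = storage[size]
    del storage[size]
    size -= 1
    storage[0] = size
    # Sift the moved node down, always swapping with the smaller child.
    k = 1
    while True:
        child = 2 * k
        if child > size:
            break
        if child + 1 <= size and storage[child + 1] < storage[child]:
            child += 1
        if storage[k] <= storage[child]:
            break
        storage[k], storage[child] = storage[child], storage[k]
        k = child
    return smallest


storage = {}
for price in [5, 3, 8, 1]:
    heap_push(storage, price)
popped = [heap_pop(storage) for _ in range(4)]  # values come out smallest first
```

Every read or write of the storage dictionary here corresponds to a contract storage access, which is where the cost discussed next comes from.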

Now, what’s the problem? In short, as we already mentioned, the primary concern is inefficiency. Theoretically, all tree-based algorithms have most of their operations take log(n) time. Here, however, the problem is that what we actually have is a tree (the heap) on top of a tree (the Ethereum Patricia tree storing the state) on top of a tree (leveldb). Hence, the market designed here actually has log³(n) overhead in practice, a rather substantial slowdown.
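As a rough back-of-the-envelope illustration of that overhead, the snippet below multiplies out the per-layer cost for a billion-item heap. It assumes, purely for illustration, that each of the three layers behaves like a binary tree over the same number of items; the real Patricia tree and leveldb have different branching factors, so the figure is an order-of-magnitude sketch only. The function name nested_tree_steps is made up for this example.

```python
import math


def nested_tree_steps(n, layers=3):
    """Return (steps per layer, total steps) for `layers` nested binary trees."""
    per_layer = math.ceil(math.log2(n))
    return per_layer, per_layer ** layers


per_layer, total = nested_tree_steps(10 ** 9)
# per_layer is about 30 for a billion items; total is that figure cubed.
```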

As another example, over the last several days I have written, profiled and tested Serpent code for elliptic curve signature verification. The code is basically a fairly simple port of pybitcointools, albeit with some uses of recursion replaced with loops in order to increase efficiency. Even so, the gas cost is staggering: an average of about 340000 for one signature verification.

And this, mind you, is after adding some optimizations. For example, see the code for taking modular exponents:

    with b = msg.data[0]:
        with e = msg.data[1]:
            with m = msg.data[2]:
                with o = 1:
                    with bit = 2 ^ 255:
                        while gt(bit, 0):
                            # A touch of loop unrolling for 20% efficiency gain
                            o = mulmod(mulmod(o, o, m), b ^ !(!(e & bit)), m)
                            o = mulmod(mulmod(o, o, m), b ^ !(!(e & div(bit, 2))), m)
                            o = mulmod(mulmod(o, o, m), b ^ !(!(e & div(bit, 4))), m)
                            o = mulmod(mulmod(o, o, m), b ^ !(!(e & div(bit, 8))), m)
                            bit = div(bit, 16)
                        return(o)

This takes up 5084 gas for any input. It’s still a fairly simple algorithm; a more advanced implementation may be able to speed this up by up to 50%, but even so, iterating over 256 bits is expensive no matter what you do.
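For readers less familiar with Serpent, the same square-and-multiply idea can be written in plain Python without the loop unrolling. This is a sketch for comparison only, not the profiled contract code; the function name modexp is an assumption. In the Serpent version, b ^ !(!(e & bit)) raises b to the power 1 or 0, which has the same effect as the conditional multiplication used here.

```python
def modexp(b, e, m, bits=256):
    """Compute b**e mod m by scanning the exponent from its highest bit down."""
    o = 1
    bit = 1 << (bits - 1)
    while bit > 0:
        o = (o * o) % m        # square once per exponent bit
        if e & bit:
            o = (o * b) % m    # multiply in b when the current bit is set
        bit >>= 1
    return o


# Python's built-in three-argument pow gives a reference result to compare against.
matches_builtin = modexp(3, 200, 1000003) == pow(3, 200, 1000003)
```

The loop always runs once per bit of the exponent width, which is why the Serpent version costs the same amount of gas for any input.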

What these two examples show is that high-performance, high-volume decentralized applications are in some cases going to be quite difficult to write on top of Ethereum without either complex instructions to implement heaps, signature verification, etc in the protocol, or something to replace them. The mechanism that we are now working on is an attempt conceived by our lead developer Gavin Wood to essentially get the best of both worlds, preserving the generality of simple instructions but at the same time getting the speed of natively implemented operations: native code extensions.

Native Code Extensions

The way that native code extensions work is as follows. Suppose that there exists some operation or data structure that we want Ethereum contracts to have access to, but which we can optimize by writing an implementation in C++ or machine code. What we do is first write an implementation in Ethereum virtual machine code, test it and make sure it works, and publish that implementation as a contract. We then either write or find an implementation that handles this task natively, and add a line of code to the message execution engine which looks for calls to the contract that we created and, instead of sub-calling the virtual machine, calls the native extension. Hence, instead of it taking 22 seconds to run the elliptic curve recovery operation, it would take only 0.02 seconds.
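A minimal sketch of that dispatch step might look like the following. Everything named here (NATIVE_EXTENSIONS, register_native, call_contract, the run_vm callback and the address "0xmodexp") is hypothetical and exists only to illustrate the idea; it is not taken from any client.

```python
NATIVE_EXTENSIONS = {}  # contract address -> native implementation


def register_native(address, fn):
    """Associate a published contract address with a native function."""
    NATIVE_EXTENSIONS[address] = fn


def call_contract(address, data, run_vm):
    """Use the native extension if one is registered, else fall back to the VM."""
    native = NATIVE_EXTENSIONS.get(address)
    if native is not None:
        return native(data)
    return run_vm(address, data)


def slow_vm(address, data):
    # Stand-in for interpreting the published contract code step by step.
    b, e, m = data
    result = 1
    for _ in range(e):
        result = (result * b) % m
    return result


# The native version must return exactly what the contract code would return.
register_native("0xmodexp", lambda data: pow(*data))
fast = call_contract("0xmodexp", (3, 50, 97), slow_vm)
slow = call_contract("0xunregistered", (3, 50, 97), slow_vm)
```

The important property is that both paths produce identical results, so a client without the native extension still reaches the same state, only more slowly.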

The problem is, how do we make sure that the fees on these native extensions are not prohibitive? This is where it gets tricky. First, let’s make a few simplifications, and see where the economic analysis leads. Suppose that miners have access to a magic oracle that tells them the maximum amount of time that a given contract can take. Without native extensions, this magic oracle exists now (it consists simply of looking at the STARTGAS of the transaction), but it becomes not quite so simple when you have a contract whose STARTGAS is 1000000 and which looks like it may or may not call a few native extensions to speed things up drastically. But suppose that it exists.

Now, suppose that a user comes in with a transaction spending 1500 gas on miscellaneous business logic and 340000 gas on an optimized elliptic curve operation, which actually costs only the equivalent of 500 gas of normal execution to compute. Suppose that the standard market-rate transaction fee is 1 szabo (ie. micro-ether) per gas. The user sets a GASPRICE of 0.01 szabo, effectively paying for 3415 gas, because he would be unwilling to pay for the entire 341500 gas for the transaction but he knows that miners can process his transaction for 2000 gas’ worth of effort. The user sends the transaction, and a miner receives it. Now, there are going to be two cases:

- The miner has enough unconfirmed transactions in its mempool and is willing to expend the processing power to produce a block where the total gas used brushes against the block-level gas limit (this, to remind you, is 1.2 times the long-term exponential moving average of the gas used in recent blocks). In this case, the miner has a static amount of gas to fill up, so it wants the highest GASPRICE it can get, and the transaction paying 0.01 szabo per gas instead of the market rate of 1 szabo per gas gets unceremoniously discarded.

- Either not enough unconfirmed transactions exist, or the miner is small and not willing or able to process every transaction. In this case, the dominating factor in whether or not a transaction is accepted is the ratio of reward to processing time. Hence, the miner’s incentives are perfectly aligned, and since this transaction has a 70% better reward-to-cost ratio than most others, it will be accepted.
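The 70% figure in the second case follows directly from the numbers in the example, as this small calculation shows. It uses exact fractions to avoid floating-point noise; the helper name reward_per_unit_effort is made up for this sketch.

```python
from fractions import Fraction


def reward_per_unit_effort(gas_charged, gasprice_szabo, effort_gas):
    """Fee earned (in szabo) per unit of real computational effort."""
    return Fraction(gas_charged) * gasprice_szabo / effort_gas


# An ordinary transaction: 2000 gas of real work, paid at the market rate.
market = reward_per_unit_effort(2000, Fraction(1), 2000)

# The example transaction: 341500 gas charged at 0.01 szabo per gas,
# but only 2000 gas' worth of real effort thanks to the native extension.
offer = reward_per_unit_effort(341500, Fraction(1, 100), 2000)

fee_szabo = 341500 * Fraction(1, 100)   # total fee the user pays
advantage = offer / market - 1          # relative improvement over market rate
```

Here fee_szabo comes to 3415 szabo and advantage to 0.7075, ie. roughly 70% better than a market-rate transaction for the same processing time.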

What we see is that, given our magic oracle, such transactions will be accepted, but they will take a couple of extra blocks to get into the network. Over time, the block-level gas limit would rise as more contract extensions are used, allowing the use of even more of them. The primary worry is that if such mechanisms become too prevalent, and more than 99% of the average block’s gas consumption comes from native extensions, then the regulatory mechanism preventing large miners from creating extremely large blocks as a denial-of-service attack on the network would be weakened: at a gas limit of 1000000000, a malicious miner could make an unoptimized contract that takes up that many computational steps, and freeze the network.

So altogether we have two problems. One is the theoretical problem of the gas limit becoming a weaker safeguard, and the other is the fact that we don’t have a magic oracle. Fortunately, we can solve the second problem, and in doing so at the same time limit the effect of the first problem. The naive solution is simple: instead of GASPRICE being just one value, there would be one default GASPRICE and then a list of [address, gasprice] pairs for specific contracts. As soon as execution enters an eligible contract, the virtual machine would keep track of how much gas it used within that scope, and then appropriately refund the transaction sender at the end. To prevent gas counts from getting too out of hand, the secondary gas prices would be required to be at least 1% (or some other fraction) of the original gasprice. The problem is that this mechanism is space-inefficient, taking up about 25 extra bytes per contract. A possible fix is to allow people to register tables on the blockchain, and then simply refer to which fee table they wish to use. In any case, the exact mechanism is not finalized; hence, native extensions may end up waiting until PoC7.
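Since the mechanism is not finalized, the following is only one possible reading of the naive solution, sketched in Python. The names validate_prices and settle_fee, the per-scope gas accounting format, and the address "0xecrecover" are all assumptions made for illustration; the 1% floor is the figure suggested above.

```python
from fractions import Fraction

MIN_FRACTION = Fraction(1, 100)  # secondary prices must be >= 1% of the default


def validate_prices(default_price, overrides):
    """Reject any per-contract gasprice below the allowed fraction."""
    for address, price in overrides.items():
        if price < default_price * MIN_FRACTION:
            raise ValueError(f"gasprice for {address} is below the minimum")


def settle_fee(gas_by_scope, default_price, overrides):
    """Return (fee actually owed, refund relative to charging the default price).

    `gas_by_scope` maps a contract address to the gas used inside it; the key
    None collects gas used outside any eligible contract.
    """
    validate_prices(default_price, overrides)
    total_gas = sum(gas_by_scope.values())
    fee = sum(gas * overrides.get(scope, default_price)
              for scope, gas in gas_by_scope.items())
    refund = total_gas * default_price - fee
    return fee, refund


# 1500 gas of ordinary logic at 1 szabo, 340000 gas inside the extension at 0.01.
fee, refund = settle_fee(
    {None: 1500, "0xecrecover": 340000},
    default_price=Fraction(1),
    overrides={"0xecrecover": Fraction(1, 100)},
)
```

With these numbers the sender ends up paying 4900 szabo: the business logic at the full market rate and the extension at its reduced rate.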

Mining

The other change that will likely begin to be introduced in PoC7 is a new mining algorithm. We (well, primarily Vlad Zamfir) have been slowly working on the mining algorithm in our mining repo, to the point where there is a working proof of concept, albeit more research is required to continue to improve its ASIC resistance. The basic idea behind the algorithm is essentially to randomly generate a new circuit every 1000 nonces; a device capable of processing this algorithm would need to be capable of processing all circuits that could be generated, and theoretically there should exist some circuit that conceivably could be generated by our system that would be equivalent to SHA256, or BLAKE, or Keccak, or any of the other algorithms in X11. Hence, such a device would have to be a generalized computer; essentially, the aim is something that tries to approach mathematically provable specialization-resistance. In order to make sure that all hash functions generated are secure, a SHA3 is always applied at the end.
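To give a feel for the shape of such a scheme, here is a toy Python sketch. It is emphatically not the algorithm in the mining repo: the operation set, the circuit length, the names generate_circuit and pow_hash, and the way the circuit is seeded are all invented for illustration. It also uses Python's hashlib.sha3_256 (the standardized SHA-3), which may not match the hash variant used by the clients.

```python
import hashlib
import random

MASK = (1 << 64) - 1
NONCES_PER_CIRCUIT = 1000  # a new random circuit every 1000 nonces

# A tiny, made-up set of 64-bit operations the "circuit" can be built from.
OPS = [
    lambda a, b: (a + b) & MASK,
    lambda a, b: a ^ b,
    lambda a, b: (a * (b | 1)) & MASK,
    lambda a, b: (((a << 7) | (a >> 57)) & MASK) ^ b,
]


def generate_circuit(header, epoch, length=16):
    """Derive a pseudo-random sequence of operations from the header and epoch."""
    rng = random.Random(f"{header.hex()}:{epoch}")
    return [rng.randrange(len(OPS)) for _ in range(length)]


def pow_hash(header, nonce):
    """Run the epoch's circuit on the nonce, then always finish with SHA3."""
    circuit = generate_circuit(header, nonce // NONCES_PER_CIRCUIT)
    state = int.from_bytes(hashlib.sha3_256(header).digest()[:8], "big")
    x = nonce & MASK
    for op_index in circuit:
        state = OPS[op_index](state, x)
        x = (x + state) & MASK
    payload = header + state.to_bytes(8, "big") + nonce.to_bytes(8, "big")
    return hashlib.sha3_256(payload).digest()


header = b"example block header"
digest = pow_hash(header, 12345)
```

Nonces 0 through 999 share one circuit and nonce 1000 triggers a freshly generated one, so a miner has to keep evaluating new circuits rather than hard-wiring a single function.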

Of course, perfect specialization-resistance is impossible; there will always be some features of a CPU that will prove to be extraneous in such an algorithm, so a nonzero theoretical ASIC speedup is inevitable. Currently, the biggest threat to our approach is likely some kind of rapidly switching FPGA. However, there is an economic argument which shows that CPUs will survive even if ASICs have a speedup, as long as that speedup is low enough; see my earlier article on mining for an overview of some of the details. A possible tradeoff that we will have to make is whether or not to make the algorithm memory-hard; ASIC resistance is hard enough as it stands, and memory-hardness may or may not end up interfering with that goal (cf. Peter Todd’s arguments that memory-based algorithms may actually encourage centralization); if the algorithm is not memory-hard, then it may end up being GPU-friendly. At the same time, we are looking into hybrid proof-of-stake scoring functions as a way of augmenting PoW with further security, requiring 51% attacks to simultaneously have a large economic component.

With the protocol in an increasingly stable state, another area in which it is time to start developing is what we are starting to call “Ethereum 1.5”: mechanisms on top of Ethereum as it stands today, without the need for any new adjustments to the core protocol, that allow for increased scalability and efficiency for contracts and decentralized applications, either by cleverly combining and batching transactions or by using the blockchain only as a backup enforcement mechanism, with only the nodes that care about a particular contract running that contract by default. There are a number of mechanisms in this category; this is something that will see considerably increased attention from both ourselves and hopefully others in the community.