A recent investigation has uncovered a troubling trend of AI chatbots disseminating false and misleading information about the 2024 election. The finding comes from a collaborative study conducted by the AI Democracy Projects and Proof News, a nonprofit media outlet. The results highlight the pressing need for regulatory oversight as AI continues to play a major role in political discourse.

Misinformation at a critical time

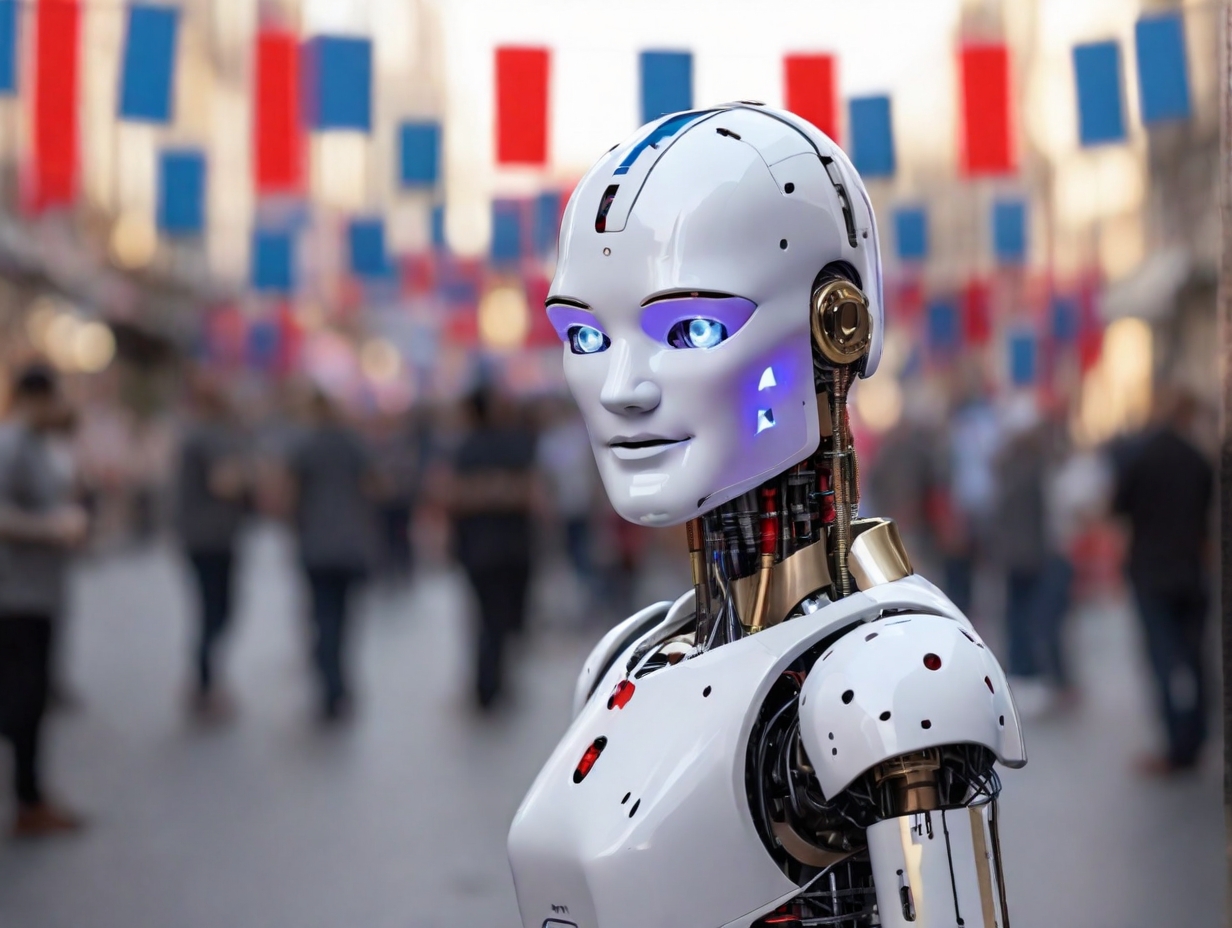

The study points out that these AI-generated inaccuracies are emerging during the crucial period of presidential primaries in the United States. With a growing number of people turning to AI for election-related information, the spread of incorrect data is particularly concerning. The research tested several AI models, including OpenAI’s ChatGPT-4, Meta’s Llama 2, Anthropic’s Claude, Google’s Gemini, and Mixtral from the French company Mistral. These platforms were found to give voters incorrect polling locations, illegal voting methods, and false registration deadlines, among other misinformation.

One alarming example cited was Llama 2’s claim that California voters could cast their votes via text message, a method that is illegal in the United States. Furthermore, none of the AI models tested correctly identified the prohibition on campaign logo attire, such as MAGA hats, at Texas polling stations. This widespread dissemination of false information has the potential to mislead voters and undermine the electoral process.

Industry response and public concern

The spread of misinformation by AI has prompted a response from both the technology industry and the public. Some tech companies have acknowledged the errors and committed to correcting them. For instance, Anthropic plans to release an updated version of its AI tool with accurate election information. OpenAI also expressed its intention to continually refine its approach based on the evolving ways its tools are used. However, Meta’s response, dismissing the findings as “meaningless,” has sparked controversy, raising questions about the tech industry’s commitment to curbing misinformation.

Public concern is growing as well. A survey from The Associated Press-NORC Center for Public Affairs Research and the University of Chicago Harris School of Public Policy reveals widespread fear that AI tools will contribute to the spread of false and misleading information during the election year. This concern is amplified by recent incidents, such as Google’s Gemini AI generating historically inaccurate and racially insensitive images.

The call for regulation and responsibility

The study’s findings underscore the urgent need for legislative action to regulate the use of AI in political contexts. Currently, the lack of specific laws governing AI in politics leaves tech companies to self-regulate, a situation that has led to significant lapses in the accuracy of information. About two weeks before the release of the study, tech companies voluntarily agreed to adopt precautions to prevent their tools from generating realistic content that misinforms voters about lawful voting procedures. However, the recent errors and falsehoods cast doubt on the effectiveness of these voluntary measures.

As AI continues to integrate into every aspect of daily life, including the political sphere, the need for comprehensive and enforceable regulations becomes increasingly apparent. These regulations should aim to ensure that AI-generated content is accurate, especially when it pertains to critical democratic processes like elections. Only through a combination of industry accountability and regulatory oversight can public trust in AI as a source of information be restored and maintained.

The recent study on AI chatbots spreading election lies serves as a wake-up call to the potential dangers of unregulated AI in the political arena. As tech companies work to address these issues, the role of government oversight should not be underestimated. Ensuring the integrity of election-related information is paramount to upholding democratic values and processes.